Neural Radiance Fields, or NeRFs, have given rise to a new generation of 3D reconstruction methods. Because NeRFs learn to model light and geometry directly from ordinary images, they are changing how computers capture and reproduce physical spaces. This article explains how they work and why they are becoming central to graphics, robotics, gaming, and virtual reality.

A New Approach to 3D Reconstruction

Traditional 3D reconstruction systems rely on explicit representations and techniques such as:

- Point clouds

- Mesh extraction

- Multi-view stereo

- Photogrammetry

These methods often struggle with:

- Complex lighting

- Semi-transparent objects

- Fine textures

- Limited viewpoints

NeRFs take a fundamentally different approach.

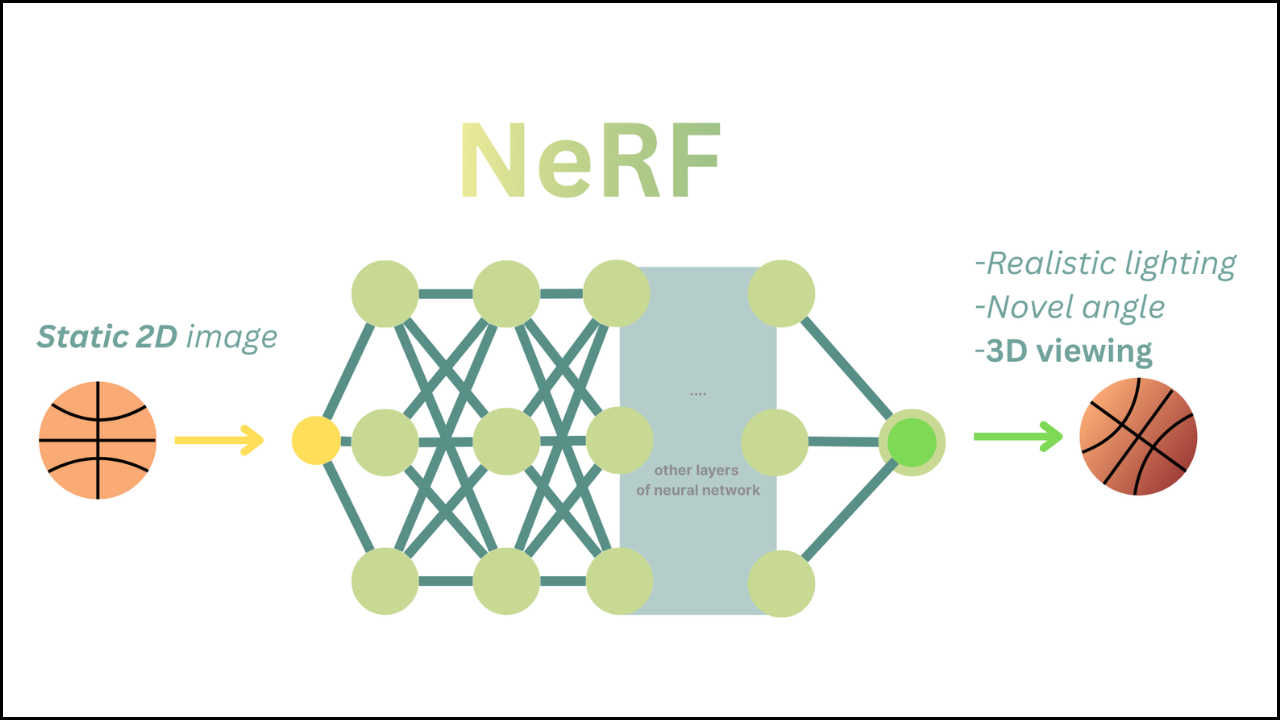

NeRF-based reconstruction treats the scene as a continuous radiance field, meaning the model learns, for every point in space:

- How much light the point emits in a given direction

- What color that light has

- How dense the point is

- How light is attenuated as it travels through space

This allows NeRFs to rebuild scenes with a level of smoothness, realism, and detail that older methods cannot easily achieve.

Why NeRFs Are a Breakthrough

1. Realistic Lighting and Shadows

- NeRFs capture view-dependent effects such as highlights and reflections, and they reproduce the soft shadows and indirect lighting present in the input photos.

- Traditional methods often struggle when lighting is uneven or dramatic.

2. Smooth and Continuous Surfaces

- NeRFs do not depend on polygons or voxels.

- Geometry is learned continuously, producing smoother edges and cleaner surfaces.

3. Minimal Input Requirements

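- NeRFs only need a set of images with known camera poses. The poses are typically estimated from the images themselves with structure-from-motion software.

- No depth sensors, laser scans, or special equipment are required.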
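To make the role of the camera pose concrete, here is a minimal sketch of how a pose and a focal length turn each pixel into a ray. It assumes a simple pinhole camera that looks along its local -z axis and a 4x4 camera-to-world matrix; the function name `get_rays` and these conventions are illustrative assumptions, and real NeRF codebases differ in their axis conventions.

```python
import numpy as np

def get_rays(height, width, focal, cam_to_world):
    """Return per-pixel ray origins and unit directions in world space.

    Assumes a pinhole camera (x right, y up, looking along -z) and a
    4x4 camera-to-world pose matrix. Both are illustrative conventions.
    """
    # Pixel grid of shape (height, width); +0.5 aims at pixel centers.
    i, j = np.meshgrid(np.arange(width) + 0.5,
                       np.arange(height) + 0.5, indexing="xy")
    # Ray directions in camera space (image row 0 is the top of the image).
    dirs = np.stack([(i - width / 2) / focal,
                     -(j - height / 2) / focal,
                     -np.ones_like(i)], axis=-1)
    # Rotate directions into world space and normalize them.
    rays_d = dirs @ cam_to_world[:3, :3].T
    rays_d /= np.linalg.norm(rays_d, axis=-1, keepdims=True)
    # Every ray starts at the camera center (the translation column).
    rays_o = np.broadcast_to(cam_to_world[:3, 3], rays_d.shape)
    return rays_o, rays_d

# Example: a tiny 6x4 image taken by a camera sitting at the world origin.
rays_o, rays_d = get_rays(height=4, width=6, focal=5.0, cam_to_world=np.eye(4))
print(rays_o.shape, rays_d.shape)
```

Each training photo contributes one such bundle of rays, paired with the pixel colors the photo recorded along them.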

4. New View Synthesis

- NeRFs can render views from angles never captured in the original photos.

- This ability is crucial for VR, filmmaking, and simulation.
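Rendering a new view starts with choosing a camera pose that was not in the training set. The sketch below builds such poses on a circular orbit around the scene. The helper names `look_at_pose` and `orbit_poses`, the z-up world, and the camera convention (x right, y up, looking along -z) are assumptions made for illustration.

```python
import numpy as np

def look_at_pose(eye, target=(0.0, 0.0, 0.0), up=(0.0, 0.0, 1.0)):
    """Build a 4x4 camera-to-world matrix for a camera at `eye` facing `target`.

    Assumes `eye - target` is not parallel to `up`.
    """
    eye = np.asarray(eye, dtype=float)
    forward = np.asarray(target, dtype=float) - eye
    forward /= np.linalg.norm(forward)
    right = np.cross(forward, np.asarray(up, dtype=float))
    right /= np.linalg.norm(right)
    true_up = np.cross(right, forward)
    pose = np.eye(4)
    pose[:3, 0] = right      # camera x axis
    pose[:3, 1] = true_up    # camera y axis
    pose[:3, 2] = -forward   # camera z axis (the camera looks along -z)
    pose[:3, 3] = eye        # camera position
    return pose

def orbit_poses(n_views, radius=4.0, height=1.0):
    """Evenly spaced poses on a circle around the origin, all facing it."""
    angles = np.linspace(0.0, 2.0 * np.pi, n_views, endpoint=False)
    return [look_at_pose((radius * np.cos(a), radius * np.sin(a), height))
            for a in angles]

poses = orbit_poses(8)
print(len(poses), poses[0].shape)
```

Feeding each of these poses through the same ray-casting and rendering steps used during training would produce a fly-around of the scene.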

How NeRFs Work Behind the Scenes

1. Scene as a Field

NeRFs model a scene as a function that maps two inputs:

- 3D position

- Viewing direction

to two outputs:

- Color

- Density

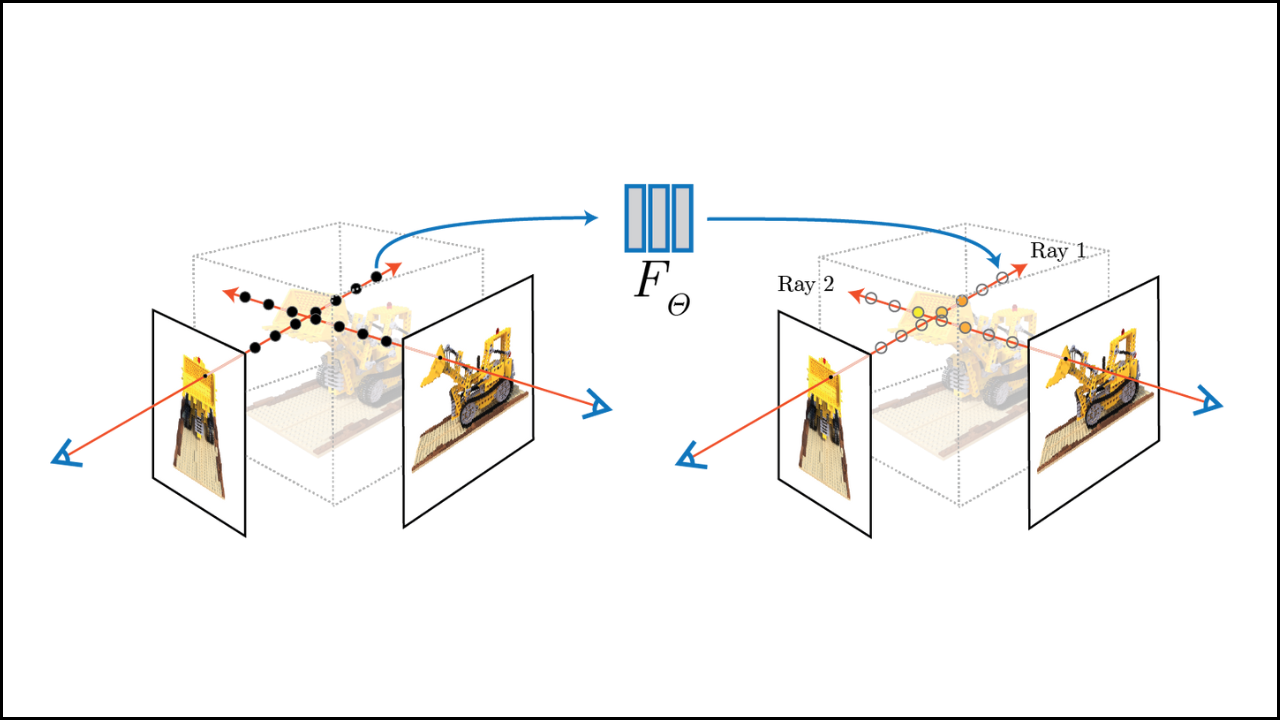

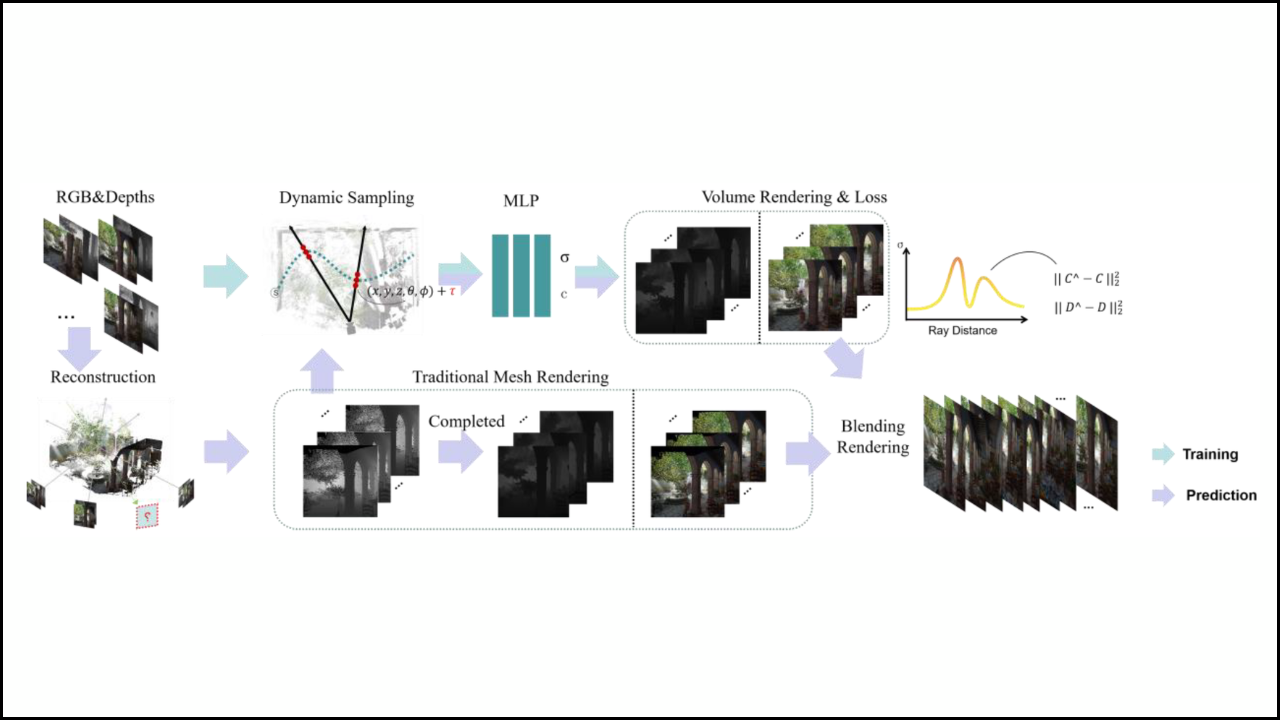

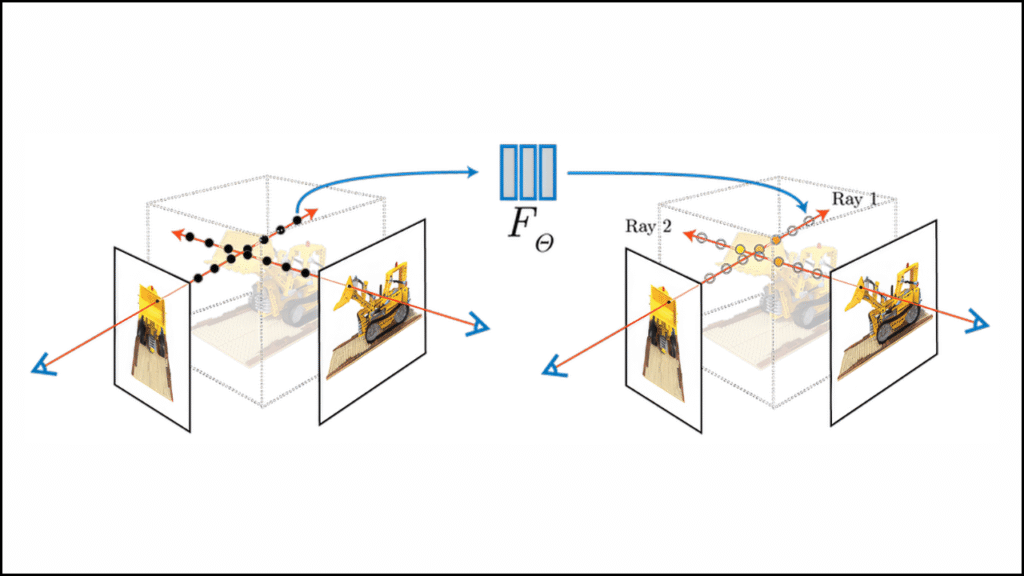
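The mapping from position and viewing direction to color and density can be illustrated without any neural network. The sketch below is a hand-written stand-in for a trained field: a soft sphere whose brightness depends on the viewing direction. The function `toy_radiance_field` and all of its constants are hypothetical and only show the shape of the interface.

```python
import numpy as np

def toy_radiance_field(positions, view_dirs):
    """Hand-written stand-in for a trained NeRF (a unit sphere at the origin).

    positions: (..., 3) points in space.
    view_dirs: (..., 3) unit vectors pointing along the direction of travel
               of the viewing ray.
    Returns rgb of shape (..., 3) in [0, 1] and density of shape (...,).
    """
    dist = np.linalg.norm(positions, axis=-1)
    # Density depends on position only: solid inside the sphere, empty outside.
    density = np.where(dist < 1.0, 10.0, 0.0)
    # Base color derived from the outward direction at each point.
    normal = positions / np.maximum(dist, 1e-8)[..., None]
    base_rgb = 0.5 + 0.5 * normal
    # Color also depends on the viewing direction: brighter when seen head-on.
    facing = np.clip(-np.sum(normal * view_dirs, axis=-1), 0.0, 1.0)
    rgb = base_rgb * (0.7 + 0.3 * facing[..., None])
    return rgb, density

points = np.array([[0.0, 0.0, 0.5], [0.0, 0.0, 2.0]])
head_on = np.array([[0.0, 0.0, -1.0], [0.0, 0.0, -1.0]])
rgb, density = toy_radiance_field(points, head_on)
print(rgb, density)
```

A real NeRF replaces this hand-written function with a neural network whose weights are fitted to the input photos, but the inputs and outputs have the same form.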

2. Ray Sampling

The system casts a ray from the camera through each pixel and samples multiple points along that ray.
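One common way to pick the sample points is stratified sampling: the ray segment between a near and a far bound is split into equal bins, and one depth is drawn from each bin. The sketch below assumes that scheme; the function name `sample_along_rays` and the `near` and `far` values are illustrative choices, not part of any specific library.

```python
import numpy as np

def sample_along_rays(rays_o, rays_d, near, far, num_samples, rng=None):
    """Stratified sampling of points along rays.

    rays_o, rays_d: (..., 3) ray origins and directions.
    Returns points of shape (..., num_samples, 3) and depths (..., num_samples).
    With rng=None the bin midpoints are used, which is deterministic.
    """
    edges = np.linspace(near, far, num_samples + 1)
    lower, upper = edges[:-1], edges[1:]
    batch_shape = rays_o.shape[:-1] + (num_samples,)
    # Position inside each bin: 0.5 for midpoints, random jitter otherwise.
    u = 0.5 if rng is None else rng.random(batch_shape)
    t = np.broadcast_to(lower + (upper - lower) * u, batch_shape)
    # Point on the ray at depth t: origin + t * direction.
    points = rays_o[..., None, :] + t[..., None] * rays_d[..., None, :]
    return points, t

# Two rays starting at the origin and travelling along -z.
rays_o = np.zeros((2, 3))
rays_d = np.tile([0.0, 0.0, -1.0], (2, 1))
points, t = sample_along_rays(rays_o, rays_d, near=2.0, far=6.0, num_samples=4)
print(t[0])  # bin midpoints between near and far
```

Passing `rng=np.random.default_rng(0)` instead jitters each sample within its bin, so that over many training steps the whole ray is covered rather than a fixed set of depths.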

3. Neural Network Prediction

For each sampled point, the network predicts:

- The color the point emits toward the camera

- How strongly the point blocks light (its density)
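The sketch below shows the output structure of such a network using a tiny, untrained model with random weights. It follows the design of the original NeRF in one respect only: density is computed from position alone, while color also sees the viewing direction. The class name `TinyRadianceMLP`, the layer sizes, and the weights are hypothetical, and a practical model would be deeper, would encode its inputs, and would be trained on images.

```python
import numpy as np

class TinyRadianceMLP:
    """Untrained toy network with random weights, for illustration only."""

    def __init__(self, hidden=32, seed=0):
        rng = np.random.default_rng(seed)
        self.w1 = rng.normal(0.0, 0.5, (3, hidden))
        self.b1 = np.zeros(hidden)
        self.w_density = rng.normal(0.0, 0.5, (hidden, 1))
        self.w_rgb = rng.normal(0.0, 0.5, (hidden + 3, 3))

    def __call__(self, positions, view_dirs):
        # Features depend on position only.
        h = np.maximum(positions @ self.w1 + self.b1, 0.0)
        # ReLU keeps density non-negative; it never sees the view direction.
        density = np.maximum(h @ self.w_density, 0.0)[..., 0]
        # Color is predicted from the features plus the view direction.
        rgb_in = np.concatenate([h, view_dirs], axis=-1)
        rgb = 1.0 / (1.0 + np.exp(-(rgb_in @ self.w_rgb)))  # sigmoid
        return rgb, density

rng = np.random.default_rng(1)
positions = rng.uniform(-1.0, 1.0, (5, 3))
view_dirs = rng.normal(size=(5, 3))
view_dirs /= np.linalg.norm(view_dirs, axis=-1, keepdims=True)

field = TinyRadianceMLP()
rgb, density = field(positions, view_dirs)
print(rgb.shape, density.shape)
```

Keeping density independent of the viewing direction means the geometry stays the same from every angle, while highlights and reflections are free to change with the viewpoint.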

4. Volumetric Rendering

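The colors of the samples along each ray are blended into the final pixel color, with each sample weighted by its density and by how much light still reaches it through the samples in front of it.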
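One standard way to write this blend is the quadrature rule used in the original NeRF paper. The symbols are introduced here for illustration and do not appear elsewhere in this article: $\mathbf{c}_i$ and $\sigma_i$ are the predicted color and density of sample $i$, $t_i$ is its depth along the ray, and $\delta_i$ is the distance to the next sample.

```latex
\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \left(1 - e^{-\sigma_i \delta_i}\right) \mathbf{c}_i,
\qquad
T_i = \exp\!\left(-\sum_{j=1}^{i-1} \sigma_j \delta_j\right),
\qquad
\delta_i = t_{i+1} - t_i
```

Here $T_i$ is the fraction of light that survives the trip from the camera to sample $i$, and $1 - e^{-\sigma_i \delta_i}$ is the opacity of sample $i$ itself.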

This process approximates how light is emitted and absorbed as it travels through a real scene.
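A minimal sketch of this blending step is shown below. Each sample gets an opacity from its density and the distance to the next sample, and its contribution is scaled by the light that has not already been blocked by closer samples. The function name `composite` and the large `far_delta` used for the last sample are assumptions made for illustration.

```python
import numpy as np

def composite(rgb, density, t, far_delta=1e10):
    """Blend per-sample predictions into one color per ray.

    rgb: (..., S, 3), density: (..., S), t: (..., S) increasing depths.
    Returns the pixel color (..., 3) and the per-sample weights (..., S).
    """
    # Distance between neighboring samples; the last one extends "to infinity".
    delta = np.concatenate(
        [np.diff(t, axis=-1), np.full_like(t[..., :1], far_delta)], axis=-1)
    # Opacity of each sample.
    alpha = 1.0 - np.exp(-density * delta)
    # Transmittance: share of light that passed every earlier sample.
    trans = np.cumprod(1.0 - alpha, axis=-1)
    trans = np.concatenate(
        [np.ones_like(trans[..., :1]), trans[..., :-1]], axis=-1)
    weights = trans * alpha
    color = np.sum(weights[..., None] * rgb, axis=-2)
    return color, weights

# One ray, three samples: empty space, then a red surface hiding a blue one.
t = np.array([[1.0, 2.0, 3.0]])
density = np.array([[0.0, 50.0, 50.0]])
rgb = np.array([[[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]])
color, weights = composite(rgb, density, t)
print(color)  # dominated by the red sample
```

The empty first sample contributes nothing, and the dense red sample blocks the blue one behind it, so the pixel comes out red. Because every operation here is differentiable, the same computation can be used to train the network by comparing rendered pixels with the input photos.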

Impact on Real-World Applications

1. Virtual Reality and Augmented Reality

- NeRFs generate highly realistic environments.

- Users can explore spaces reconstructed from ordinary photos.

2. Robotics and Autonomous Navigation

- Robots can gain a detailed 3D understanding of their surroundings.

- Smooth surfaces and lighting-aware models can support navigation and planning.

3. Movies and Visual Effects

- Filmmakers reconstruct sets or locations in 3D without needing heavy scanning equipment.

- NeRFs allow virtual cameras to “move” through scenes after filming.

4. Cultural Heritage Preservation

- Monuments and historical sites can be captured quickly in full 3D.

- A modest set of overlapping photos can be enough to preserve shape, texture, and appearance.

5. Real Estate and Architecture

- Property walkthroughs become more detailed and natural-looking.

- Architects visualize interiors from ordinary photos.

Advantages Over Traditional Reconstruction Methods

Higher Quality

NeRFs capture fine or hard-to-model structures that traditional methods often miss:

- Hair

- Fabric folds

- Vegetation

- Glass reflections

More Realistic Rendering

Because NeRFs model radiance, the light leaving each point in each direction, they can generate images that closely resemble real photographs.

Less Hardware Needed

NeRFs work well with images from:

- Smartphones

- Basic cameras

- Handheld photography

No expensive 3D scanners are required.

Flexible Editing

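Once a scene is reconstructed with a NeRF, it can be:

- Re-rendered along new camera paths

- Viewed from any angle, with the best quality near the captured viewpoints

- Integrated into 3D environments

- Relit, when using NeRF variants that separate lighting from materials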

Challenges and Ongoing Improvements

Despite their strengths, NeRFs still face limitations:

- Training can be slow

- Rendering can require heavy computation

- Dynamic scenes are difficult

- Moving objects cause blurring or artifacts

However, newer tools such as NerfAcc and Instant-NGP, along with other fast NeRF variants, are drastically reducing training and rendering times.
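A rough count of network evaluations shows where the computational cost comes from. The figures below, an 800x800 image with 192 samples per ray, are illustrative assumptions rather than measurements, and the helper name `network_queries_per_image` is hypothetical.

```python
def network_queries_per_image(width, height, samples_per_ray):
    """Number of network evaluations needed to render one image naively."""
    return width * height * samples_per_ray

queries = network_queries_per_image(width=800, height=800, samples_per_ray=192)
print(f"{queries:,} network evaluations per frame")  # 122,880,000
```

With over a hundred million evaluations for a single frame under these assumptions, it is clear why faster variants focus on skipping empty space and on cheaper scene representations.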

Why NeRFs Represent a Revolution

NeRFs introduce three fundamental changes:

- A shift from geometry-based to radiance-field-based 3D reconstruction

- A shift from discrete models to continuous neural representations

- A shift from manually designed algorithms to learned scene understanding

This combination enables capabilities that were difficult to achieve with earlier 3D reconstruction methods, making NeRFs one of the most influential recent developments in computer vision.

Final Thoughts

Neural Radiance Fields are driving a major change in 3D scene reconstruction. By learning how light behaves in space, they produce smoother geometry, lifelike textures, and more realistic novel viewpoints from simple 2D images. These advantages explain why NeRFs are reshaping industries from entertainment to robotics and why they sit at the center of next-generation 3D technology.