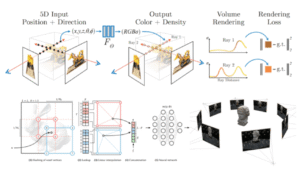

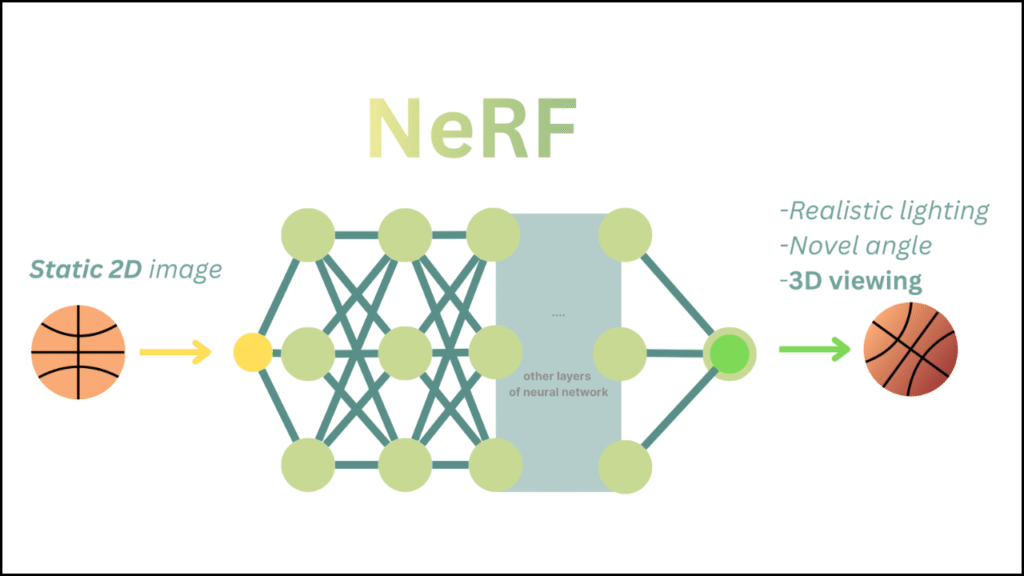

Neural Radiance Fields (NeRF) have become a powerful method for learning realistic 3D scenes from images. High-quality rendering remains the biggest advantage of NeRF models, but slow training and inference limit practical use. NerfAcc addresses this problem by accelerating NeRF pipelines inside PyTorch workflows. Faster computation, better resource usage, and straightforward integration explain its growing relevance in modern neural rendering.

Overview

| Aspect | Key Detail |
|---|---|
| Goal | Faster NeRF training and inference |
| Framework | PyTorch |
| Core Method | Occupancy-based acceleration |
| Users | Researchers and developers |

What Is NerfAcc

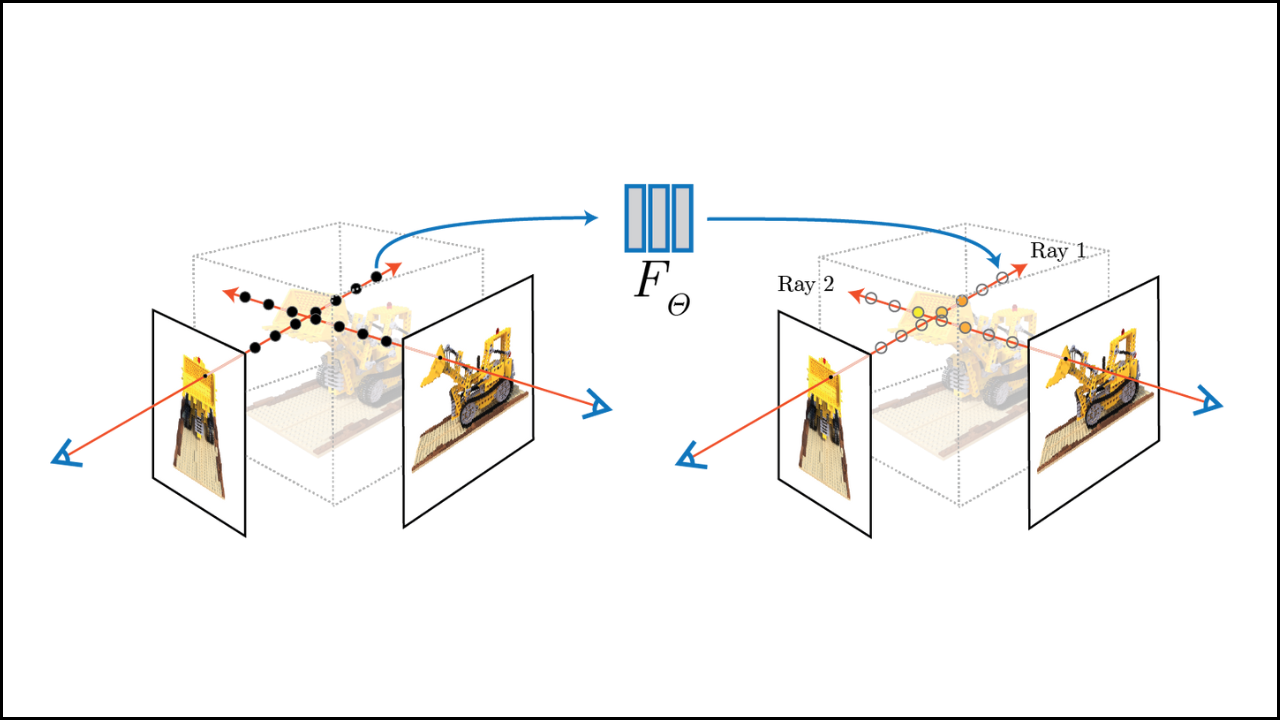

NerfAcc is a lightweight acceleration library built specifically for NeRF-style models. The main idea is to reduce computation wasted on empty 3D space. Traditional NeRF approaches evaluate many unnecessary points along each ray, even where no scene geometry exists.

NerfAcc introduces spatial awareness into the rendering process. Computation is performed only where the scene actually contains useful information. This targeted evaluation leads to significant performance improvements without lowering output quality.

NeRF Performance Issues

Standard NeRF pipelines suffer from several efficiency problems. Uniform sampling along rays results in thousands of redundant neural network evaluations. Training times often stretch into multiple days for complex scenes.
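To get a feel for the scale, the count below is a back-of-the-envelope sketch. The image size, the number of samples per ray, and the 10% figure for surviving samples are illustrative assumptions, not measurements from NerfAcc.

```python
def network_evaluations(width, height, samples_per_ray):
    """Number of model queries needed to render one image with uniform sampling."""
    return width * height * samples_per_ray


# Illustrative numbers only: an 800x800 image with 192 samples per ray.
dense = network_evaluations(800, 800, 192)

# Hypothetical case in which skipping empty space keeps about 10% of the samples.
sparse = dense // 10

print(f"uniform sampling: {dense:,} evaluations per image")
print(f"after skipping:   {sparse:,} evaluations per image")
```

Because the cost scales with the product of rays and samples, any reduction in samples per ray carries over directly to every image and every training batch.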

Inference speed also becomes a concern. High rendering latency makes interactive visualization difficult. Memory usage increases rapidly with dense sampling, limiting both batch size and resolution. These issues create a strong need for acceleration methods like NerfAcc.

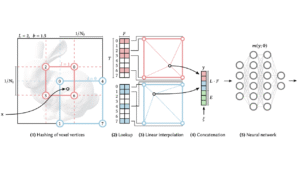

Occupancy Grids

Occupancy grids are the foundation of NerfAcc’s acceleration strategy. Each grid cell represents whether a region of space contains meaningful geometry. During training, this grid updates continuously as the model learns the scene.
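One common way to keep such a grid current during training is sketched below, in the style popularized by Instant-NGP: each cell holds a decayed running density that is refreshed with new density estimates and then thresholded. The function name `update_occupancy` and the `decay` and `threshold` values are assumptions chosen for illustration; this is not NerfAcc's API.

```python
def update_occupancy(density_ema, new_density, decay=0.95, threshold=0.01):
    """Refresh per-cell running densities and derive a binary occupancy mask.

    Old values fade by `decay`, so cells that stop receiving density
    eventually fall below `threshold` and are marked empty again.
    """
    updated = [max(old * decay, new) for old, new in zip(density_ema, new_density)]
    occupied = [value > threshold for value in updated]
    return updated, occupied


# A flattened toy grid of four cells. In practice the fresh densities would
# come from querying the model being trained at points inside each cell.
ema = [0.0, 0.5, 0.02, 0.0]
fresh = [0.0, 0.1, 0.0, 0.3]
ema, occupied = update_occupancy(ema, fresh)
print(occupied)
```

Taking the maximum of the decayed old value and the new estimate makes the grid conservative: a cell is quick to become occupied and slow to be declared empty, which reduces the risk of skipping real geometry.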

Ray marching uses the grid to skip empty cells without querying the network. Rays evaluate samples only inside occupied regions. Early termination stops a ray once the accumulated opacity makes further samples invisible, which prevents unnecessary computation. As a result, GPU resources focus on surfaces and fine details rather than empty space.
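The skipping and early-termination logic can be sketched for a single ray in plain Python. This is a simplified illustration rather than NerfAcc's CUDA implementation: `march_ray`, its parameters, and the toy scene are hypothetical, the scene is assumed to lie in the unit cube, and the step size is fixed.

```python
import math


def march_ray(origin, direction, occupied, resolution, density_fn,
              near=0.0, far=1.0, step=0.01, min_transmittance=1e-3):
    """March one ray through the unit cube [0, 1)^3.

    Returns (opacity, n_queries): the accumulated opacity and the number
    of density evaluations that were actually performed.
    """
    transmittance = 1.0
    n_queries = 0
    n_steps = int(round((far - near) / step))
    for i in range(n_steps):
        t = near + (i + 0.5) * step
        point = [o + t * d for o, d in zip(origin, direction)]
        # Samples outside the grid are treated as empty.
        if any(c < 0.0 or c >= 1.0 for c in point):
            continue
        ix, iy, iz = (int(c * resolution) for c in point)
        if not occupied[ix][iy][iz]:
            continue  # empty cell: skip without querying the model
        sigma = density_fn(point)
        n_queries += 1
        transmittance *= math.exp(-sigma * step)
        if transmittance < min_transmittance:
            break  # early termination: the ray is effectively opaque
    return 1.0 - transmittance, n_queries


# Toy scene: a 4x4x4 grid in which only the slab 0.5 <= x < 0.75 is occupied.
resolution = 4
occupied = [[[ix == 2 for _ in range(resolution)]
             for _ in range(resolution)]
            for ix in range(resolution)]
origin, direction = (0.0, 0.5, 0.5), (1.0, 0.0, 0.0)

# Thin medium: every sample inside the slab is evaluated.
_, thin_queries = march_ray(origin, direction, occupied, resolution, lambda p: 1.0)
# Dense medium: the ray saturates and stops before leaving the slab.
opacity, dense_queries = march_ray(origin, direction, occupied, resolution, lambda p: 50.0)
print(thin_queries, dense_queries)
```

In this toy scene a uniform march would take 100 steps along the ray. Grid skipping alone reduces that to the 25 samples inside the slab, and early termination cuts the dense case to 14.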

Ray Sampling

Efficient ray sampling plays a major role in NeRF speed. NerfAcc replaces uniform sampling with adaptive strategies. Step sizes change depending on whether rays travel through empty or occupied regions.

Sampling stays sparse in empty space and becomes dense near geometry. This balance reduces the total number of samples without harming visual quality. Fewer wasted samples can also reduce noise during optimization, which helps training stability.
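A minimal one-dimensional sketch of this idea follows. It assumes the ray crosses a row of equally sized cells whose occupancy is already known; `adaptive_samples` and its parameters are hypothetical names for illustration and do not describe NerfAcc's actual kernels.

```python
def adaptive_samples(occupied_cells, cell_size, fine_step):
    """Return sample positions along a ray crossing a 1D row of cells.

    Empty cells are crossed in a single jump with no samples. Occupied
    cells are sampled at the midpoints of intervals of roughly
    `fine_step` length.
    """
    samples = []
    for index, is_occupied in enumerate(occupied_cells):
        if not is_occupied:
            continue  # jump straight to the next cell boundary
        cell_start = index * cell_size
        n = max(1, round(cell_size / fine_step))
        width = cell_size / n
        samples.extend(cell_start + (k + 0.5) * width for k in range(n))
    return samples


# Eight cells covering [0, 1); only the third and fourth contain geometry.
cells = [False, False, True, True, False, False, False, False]
samples = adaptive_samples(cells, cell_size=0.125, fine_step=0.025)
uniform_count = round(len(cells) * 0.125 / 0.025)
print(len(samples), "adaptive samples vs", uniform_count, "uniform samples")
```

The sampling density inside the occupied cells matches what uniform sampling would use there, so the reduction comes entirely from the empty stretch of the ray.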

PyTorch Support

PyTorch integration makes NerfAcc easy to use in existing workflows. The library works with standard PyTorch tensors and CUDA kernels. Developers can add NerfAcc components without rewriting entire codebases.

Automatic differentiation remains fully supported. Optimizers, learning rate schedulers, and mixed-precision training function as usual. This smooth compatibility encourages adoption in both research and production environments.

Training Speed

Training acceleration is one of the most visible benefits of NerfAcc. Faster ray evaluation allows more iterations per second. Models reach convergence sooner while maintaining strong visual quality.

Reduced sampling noise helps the network learn geometry more effectively. Clearer structures appear earlier during training. Overall training time decreases, enabling faster experimentation and iteration.

Inference Gains

Inference speed matters for visualization and deployment. NerfAcc lowers rendering latency by cutting the number of samples evaluated per ray. Batch rendering becomes more efficient, allowing higher-resolution outputs.

Although real-time NeRF rendering remains difficult, NerfAcc brings workflows closer to interactive performance. Scene previews, debugging, and user exploration become more practical.

Memory Use

Lower sampling density directly reduces memory consumption. Occupancy grids remain compact even for large scenes. This efficiency allows higher-resolution training on the same hardware.
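The compactness of an occupancy grid is easy to check with simple arithmetic. The sketch below assumes a cubic grid stored as a bitfield with one bit per cell; the resolutions are illustrative, and an implementation that also keeps a floating-point density per cell would need correspondingly more memory.

```python
def grid_bytes(resolution, bits_per_cell=1):
    """Memory needed for a cubic occupancy grid, in bytes."""
    cells = resolution ** 3
    return cells * bits_per_cell // 8


for res in (64, 128, 256):
    print(f"{res}^3 grid: {grid_bytes(res) / 1024:.0f} KiB as a bitfield")
```

Even at 256 cells per axis the bitfield takes about 2 MiB, which is small next to the activations produced by a batch of ray samples.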

Multi-GPU setups can also benefit, since fewer samples per ray mean less data to move between devices. Scalability improves for large datasets and complex scenes, supporting broader NeRF applications.

Use Cases

Research environments benefit from faster training cycles and quicker experimentation. NerfAcc enables more extensive testing of NeRF variants and rendering strategies.

Industry applications include digital twins, robotics simulation, and immersive content creation. Faster inference improves user experience, while reduced training time shortens development cycles.

Final Analysis

NerfAcc offers a practical and effective solution to NeRF performance limitations. Occupancy-aware sampling, efficient ray marching, and PyTorch-friendly design combine to accelerate both training and inference. Faster convergence, lower memory usage, and smoother rendering make NerfAcc an important tool for scalable neural rendering workflows.

FAQs

Q: What does NerfAcc mainly improve?

A: NerfAcc speeds up NeRF training and inference.

Q: Does NerfAcc reduce rendering quality?

A: No. NerfAcc maintains quality because it skips only empty space.

Q: Is NerfAcc suitable for large scenes?

A: NerfAcc supports large scenes through efficient sampling and memory use.