Loss functions guide NeRF models to predict accurate density and color along rays. Custom loss functions allow researchers to emphasize different aspects of the reconstruction, such as high-frequency details, depth consistency, or smoothness. Proper design and integration of these functions can improve convergence speed, rendering quality, and multi-scene generalization.

Why Custom Loss Functions Matter

- Standard mean squared error (MSE) often fails to capture fine, high-frequency details.

- Depth-aware losses improve scene geometry reconstruction.

- Perceptual losses emphasize image quality as perceived by human viewers.

- Regularization terms prevent overfitting or noisy predictions.

- Multi-objective losses balance multiple aspects like color, depth, and smoothness simultaneously.

Common Types of Custom Losses

| Loss Type | Purpose |
|---|---|
| MSE (Pixel Loss) | Measures the difference between predicted and ground truth RGB values. |
| Depth Loss | Encourages correct depth estimation along rays. |
| Smoothness Loss | Reduces noise and ensures local spatial consistency. |
| Perceptual Loss | Optimizes high-level visual similarity using pretrained networks. |
| Regularization Loss | Penalizes extreme density or color predictions to prevent artifacts. |
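
To make the smoothness row concrete, here is a minimal sketch of a total-variation style penalty on rendered depth. It assumes rays are sampled as small image patches so that neighboring pixels sit next to each other in the tensor; the function name `depth_smoothness_loss` and the `(B, H, W)` patch layout are illustrative assumptions, not part of any particular NeRF codebase.

```python
import torch

def depth_smoothness_loss(depth_patch: torch.Tensor) -> torch.Tensor:
    """Mean absolute difference between neighboring depths in a (B, H, W) patch."""
    # Differences between vertically and horizontally adjacent pixels.
    dy = (depth_patch[:, 1:, :] - depth_patch[:, :-1, :]).abs()
    dx = (depth_patch[:, :, 1:] - depth_patch[:, :, :-1]).abs()
    return dx.mean() + dy.mean()

torch.manual_seed(0)
flat = torch.ones(2, 4, 4)                  # constant depth: perfectly smooth
noisy = flat + 0.1 * torch.randn(2, 4, 4)   # same patch with added noise
flat_loss = depth_smoothness_loss(flat).item()
noisy_loss = depth_smoothness_loss(noisy).item()
print(flat_loss, noisy_loss)
```

The constant patch yields a loss of zero, while the noisy patch is penalized. Because this term also penalizes genuine depth edges, it is usually given a small weight.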

Designing a Custom Loss Function

- Start by defining the primary objective: RGB accuracy, depth consistency, or smoothness.

- Combine multiple loss terms with weighted coefficients to balance their influence.

- Use differentiable operations to ensure gradients propagate correctly.

- Test the function on small datasets before full-scale training.
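
The design steps above can be sketched as a small helper that takes named loss terms and per-term weights. The helper name `combine_losses` and the term names `rgb` and `reg` are hypothetical; the only requirement is that every term is a differentiable scalar tensor.

```python
import torch

def combine_losses(terms: dict, weights: dict):
    """Weighted sum of scalar loss tensors; terms without a weight default to 1.0."""
    return sum(weights.get(name, 1.0) * value for name, value in terms.items())

pred = torch.tensor([0.5, 0.5], requires_grad=True)
target = torch.tensor([1.0, 0.0])

terms = {
    "rgb": ((pred - target) ** 2).mean(),   # primary objective: 0.25
    "reg": pred.abs().mean(),               # simple magnitude penalty: 0.5
}
total = combine_losses(terms, {"rgb": 1.0, "reg": 0.1})
total.backward()                            # gradients reach pred through both terms
print(total.item(), pred.grad)
```

Keeping the terms in a dictionary makes it easy to log each one separately and to change weights without touching the loss code.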

Implementation Example

| Step | Explanation |
|---|---|
| Define Loss Terms | Create functions for MSE, depth, smoothness, or perceptual losses. |
| Weight Losses | Assign scaling factors to each loss term according to importance. |
| Combine Losses | Sum or average the weighted losses to form the final objective. |
| Integrate in Training Loop | Use the custom loss in place of plain MSE as the objective that is backpropagated. |

Example in PyTorch:

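    def custom_nerf_loss(pred_rgb, true_rgb, pred_depth=None, true_depth=None, alpha=0.1):
        # Photometric term: mean squared error between rendered and ground-truth colors.
        mse_loss = ((pred_rgb - true_rgb) ** 2).mean()
        # The depth term is optional and contributes nothing without depth supervision.
        if pred_depth is not None and true_depth is not None:
            depth_loss = ((pred_depth - true_depth) ** 2).mean()
        else:
            depth_loss = 0
        # alpha scales the depth term relative to the color term.
        total_loss = mse_loss + alpha * depth_loss
        return total_loss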

Balancing Multiple Losses

- Start with equal weights, then adjust based on validation results.

- Monitor convergence of each term to prevent one loss dominating others.

- Consider dynamic weighting strategies where weights evolve during training.

- Avoid overly complex loss functions that significantly increase training time.
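
One simple form of dynamic weighting is a linear warm-up, where an auxiliary term starts at zero weight and ramps up after the color loss has begun to converge. The sketch below is one possible schedule under that assumption; the function name `warmup_weight` and the step counts are illustrative values, not recommendations.

```python
def warmup_weight(step: int, start_step: int, ramp_steps: int, max_weight: float) -> float:
    """Linearly ramp a loss weight from 0 to max_weight, beginning at start_step."""
    if step <= start_step:
        return 0.0
    progress = min(1.0, (step - start_step) / ramp_steps)
    return max_weight * progress

# Depth weight stays at 0 for 1000 steps, then ramps to 0.1 over the next 500.
for step in (0, 1000, 1250, 1500, 5000):
    print(step, warmup_weight(step, start_step=1000, ramp_steps=500, max_weight=0.1))
```

Inside a training loop, the returned value would replace a fixed coefficient such as `alpha` when the total loss is computed.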

Integration with PyTorch Training Loop

| Step | Purpose |

|---|---|

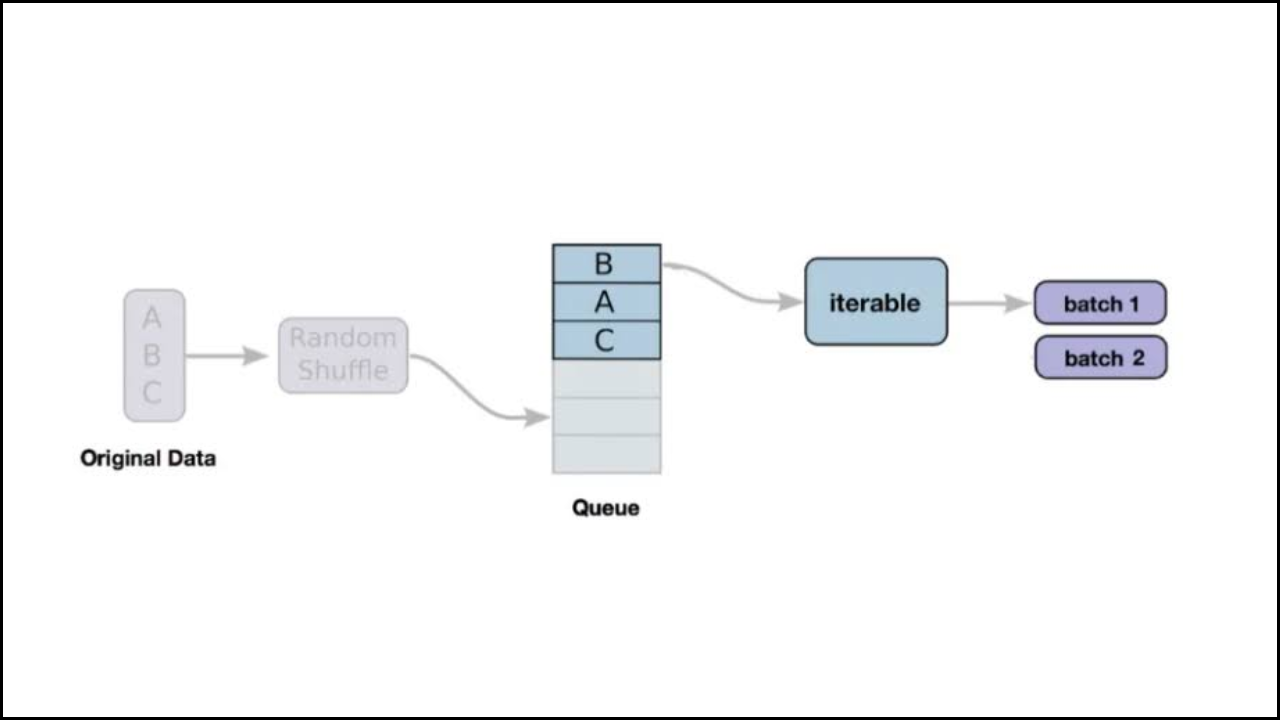

| Load Dataset | Provide RGB images, depth maps, and camera poses. |

| Forward Pass | Compute predicted RGB and depth values from NeRF. |

| Compute Custom Loss | Evaluate loss using combined weighted terms. |

| Backpropagation | Update NeRF parameters using optimizer.step(). |

| Validation Check | Monitor loss and adjust weights if necessary. |
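
The steps in the table can be sketched as a short loop. This is a minimal illustration under strong assumptions: the model is a small MLP standing in for a NeRF renderer (no point sampling or volume rendering), and the batch is synthetic random data rather than images, depth maps, and poses. The name `custom_loss` and all sizes are placeholders.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Stand-in for a NeRF renderer: maps a 6-D ray (origin + direction) to RGB + depth.
# A real model would sample points along each ray and volume-render them.
model = nn.Sequential(nn.Linear(6, 64), nn.ReLU(), nn.Linear(64, 4))
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)

# Synthetic batch in place of a real dataset.
rays = torch.randn(256, 6)
true_rgb = torch.rand(256, 3)
true_depth = torch.rand(256)

def custom_loss(pred_rgb, true_rgb, pred_depth, true_depth, alpha=0.1):
    rgb_term = ((pred_rgb - true_rgb) ** 2).mean()
    depth_term = ((pred_depth - true_depth) ** 2).mean()
    return rgb_term + alpha * depth_term

losses = []
for step in range(200):
    out = model(rays)                                    # forward pass
    pred_rgb, pred_depth = torch.sigmoid(out[:, :3]), out[:, 3]
    loss = custom_loss(pred_rgb, true_rgb, pred_depth, true_depth)
    optimizer.zero_grad()                                # clear stale gradients
    loss.backward()                                      # backpropagation
    optimizer.step()                                     # parameter update
    losses.append(loss.item())

print(f"first loss {losses[0]:.4f}, last loss {losses[-1]:.4f}")
```

A validation pass on held-out rays would normally follow every few hundred steps; it is omitted here to keep the sketch short.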

Tips for Effective Custom Loss Usage

- Use smaller learning rates when combining multiple complex losses.

- Regularly visualize intermediate reconstructions to see the effect of each term.

- Normalize input data to prevent scale-related loss imbalance.

- Gradually introduce additional loss terms after initial convergence.

Advanced Strategies

- Perceptual Losses: Use features from pretrained networks (e.g., VGG) to guide high-level image similarity.

- Adversarial Losses: Combine with a discriminator network to encourage photorealistic outputs.

- Geometric Losses: Penalize deviations in predicted 3D structure using normal or occupancy consistency.

- Scene-Adaptive Weighting: Adjust loss weights depending on scene complexity or depth variance.
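
As an illustration of the perceptual loss idea, the sketch below compares feature maps of a rendered patch and its ground truth. To stay runnable offline it uses a tiny randomly initialized convolution as the feature extractor; this is only a stand-in, and in practice `feature_net` would be frozen early layers of a pretrained network such as VGG. It also assumes rays are rendered as image patches of shape `(N, 3, H, W)`, since a feature network needs spatial context.

```python
import torch
from torch import nn

def perceptual_loss(pred_img, true_img, feature_net: nn.Module) -> torch.Tensor:
    """MSE between feature maps of predicted and ground-truth patches (N, 3, H, W)."""
    with torch.no_grad():
        target_feats = feature_net(true_img)   # target features need no gradient
    pred_feats = feature_net(pred_img)         # gradients flow back to pred_img
    return ((pred_feats - target_feats) ** 2).mean()

torch.manual_seed(0)
# Stand-in feature extractor with random weights (an assumption for this sketch).
feature_net = nn.Sequential(nn.Conv2d(3, 8, kernel_size=3, padding=1), nn.ReLU()).eval()
for p in feature_net.parameters():
    p.requires_grad_(False)                    # keep the feature network frozen

pred = torch.rand(1, 3, 16, 16, requires_grad=True)
true = torch.rand(1, 3, 16, 16)
loss = perceptual_loss(pred, true, feature_net)
loss.backward()
print(loss.item(), pred.grad.shape)
```

Freezing the feature network matters: only the rendered image, and through it the NeRF parameters, should receive gradients.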

Common Pitfalls

| Pitfall | Solution |
|---|---|
| Dominant Loss Term | Scale losses or use dynamic weighting. |
| Slow Convergence | Reduce the number of loss terms or tune the learning rate. |
| Overfitting to a Small Dataset | Add regularization and augment data. |
| Gradient Instability | Ensure all loss components are differentiable; if spikes persist, lower the learning rate or clip gradients. |
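
A dominant loss term is easier to diagnose when the gradient each term induces is measured separately. The following sketch does this with `torch.autograd.grad`; the helper name `grad_norm_per_term`, the linear stand-in model, and the deliberately over-scaled depth term are assumptions for illustration.

```python
import torch
from torch import nn

def grad_norm_per_term(terms: dict, params) -> dict:
    """L2 norm of the gradient that each loss term induces on shared parameters."""
    params = [p for p in params if p.requires_grad]
    norms = {}
    for name, value in terms.items():
        # retain_graph keeps the graph alive so later terms can be differentiated too.
        grads = torch.autograd.grad(value, params, retain_graph=True, allow_unused=True)
        squared = sum((g ** 2).sum() for g in grads if g is not None)
        norms[name] = float(squared) ** 0.5
    return norms

torch.manual_seed(0)
model = nn.Linear(3, 4)                     # stand-in for a NeRF network
out = model(torch.randn(8, 3))
terms = {
    "rgb": (out[:, :3] ** 2).mean(),
    "depth": 100.0 * (out[:, 3] ** 2).mean(),   # intentionally over-scaled term
}
norms = grad_norm_per_term(terms, model.parameters())
print(norms)
```

If one norm is orders of magnitude larger than the others, reducing that term's weight is a reasonable first adjustment.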

Evaluating the Effectiveness of Custom Loss Functions

- Quantitative metrics provide insight into how well the model is learning with the custom loss.

- Common evaluation metrics include PSNR (Peak Signal-to-Noise Ratio), SSIM (Structural Similarity Index), and LPIPS (Learned Perceptual Image Patch Similarity).

- Comparing validation metrics between standard MSE and custom loss helps identify improvements in high-frequency detail and depth accuracy.

- Visualization of predicted images alongside ground truth highlights qualitative differences.

- Ablation studies, where individual loss components are removed, show each term’s contribution to overall performance.

- Monitoring gradient norms helps detect when one loss term dominates training or destabilizes convergence.

| Evaluation Method | Purpose |
|---|---|
| PSNR | Measures overall image reconstruction accuracy. |
| SSIM | Captures structural similarity between predicted and true images. |
| LPIPS | Evaluates perceptual similarity using deep network features. |
| Visual Inspection | Detects fine details, artifacts, and color fidelity. |
| Ablation Study | Assesses the effect of individual loss terms. |
| Gradient Monitoring | Checks that each loss term contributes comparably during training. |

- Iteratively adjusting loss weights based on these evaluations improves convergence speed and image quality.

- Depth-related losses can be validated with 3D point cloud comparisons to detect geometric errors.

- Metrics can be logged per epoch to track trends and detect overfitting early.
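
Of the metrics above, PSNR is the simplest to compute directly because it follows from the MSE: PSNR = 10 · log10(max_val² / MSE). The sketch below assumes images are float tensors scaled to `[0, max_val]`; the function name `psnr` is a placeholder rather than a library call.

```python
import torch

def psnr(pred: torch.Tensor, target: torch.Tensor, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for images with values in [0, max_val]."""
    mse = ((pred - target) ** 2).mean()
    # Identical images give mse == 0 and therefore an infinite PSNR.
    return (10.0 * torch.log10(max_val ** 2 / mse)).item()

target = torch.zeros(4, 4, 3)
pred = torch.full((4, 4, 3), 0.1)     # uniform error of 0.1, so MSE = 0.01
score = psnr(pred, target)
print(round(score, 2))                # 20.0
```

Logging this value per epoch for both the MSE baseline and the custom loss gives a direct comparison; SSIM and LPIPS require dedicated implementations and are not sketched here.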

Final Analysis

Custom loss functions allow NeRF models to focus on specific reconstruction objectives beyond simple pixel error. Carefully designed combinations of color, depth, smoothness, and perceptual losses can improve rendering quality, speed up convergence, and reduce artifacts. Keeping every term differentiable and well scaled within the PyTorch training loop makes backpropagation reliable and training behavior more predictable across complex scenes.