Neural Radiance Fields, or NeRFs, use a learned model of how light is distributed through a real scene. Starting from ordinary photographs taken from known camera positions, a NeRF learns the color and density at every point in space and can then render detailed views of the scene from viewpoints that were never photographed. Because it recovers both geometry and lighting effects with high fidelity from images alone, NeRF has become a major milestone in computer vision and graphics.


Understanding What a Radiance Field Is

Meaning of Radiance Field

- A radiance field describes how much light (radiance) leaves each point in space in each direction, including toward the camera.

- The field maps a 3D position and a viewing direction to a color and a density.

- This lets NeRF treat the world as a smooth, continuous function instead of a discrete mesh or voxel grid.

Core Components of a Radiance Field

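- Color is the RGB light a point sends toward the viewer.

- Density describes how solid or transparent each region is. It depends only on position, not on viewing direction.

- Direction lets color change with viewpoint, which is how glossy and reflective materials are captured.

- Position defines where the point lies in 3D space.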
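To make these four components concrete, here is a minimal sketch of the interface a radiance field exposes: a position and a viewing direction go in, a color and a density come out. The function `toy_radiance_field` is hypothetical and hand-written (a matte red sphere of radius 1 with an assumed density of 10 inside it); a real NeRF learns this mapping with a neural network instead.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def toy_radiance_field(position: Vec3, direction: Vec3) -> Tuple[Vec3, float]:
    """Hypothetical stand-in for a trained NeRF: a matte red sphere of radius 1.

    Returns (rgb, sigma). `direction` is accepted to match the NeRF interface,
    but a matte surface looks the same from every angle, so it is ignored here.
    """
    x, y, z = position
    inside = math.sqrt(x * x + y * y + z * z) <= 1.0
    sigma = 10.0 if inside else 0.0   # density: solid inside, empty space outside
    rgb = (0.8, 0.1, 0.1)             # constant (view-independent) color
    return rgb, sigma

rgb, sigma = toy_radiance_field((0.0, 0.0, 0.5), (0.0, 0.0, -1.0))
print(rgb, sigma)  # (0.8, 0.1, 0.1) 10.0
```

Color returned outside the sphere never matters, because a density of zero means nothing there contributes to an image.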

Key Ingredients of a Radiance Field

| Element | Purpose in NeRF |
|---|---|
| Color | Determines the final pixel shade |
| Density | Defines thickness and surface structure |
| Direction | Controls view-dependent reflections |
| Position | Links each value to a specific 3D point |
| Volume Properties | Handle fog, smoke, and transparent surfaces |

How NeRF Learns Geometry

Sampling Points in Space

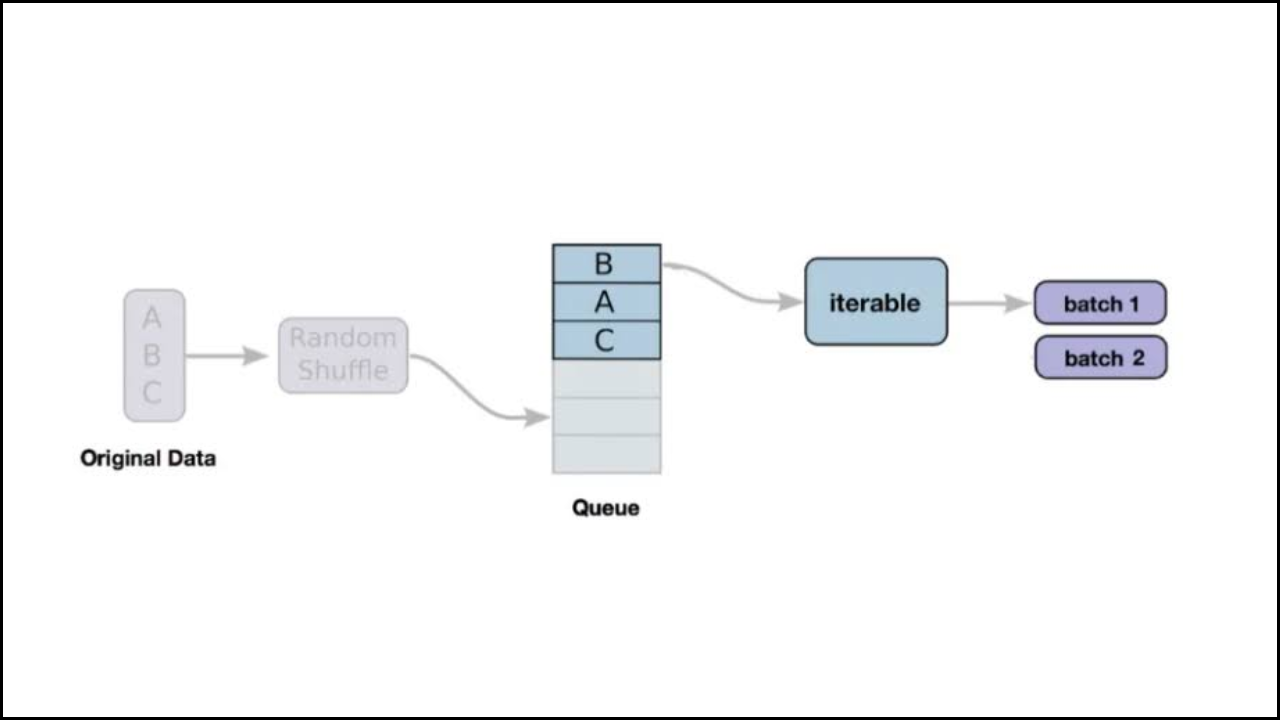

- NeRF casts one virtual ray from the camera through each pixel into the scene.

- Each ray is sampled at many points along its path.

- The model predicts a density (and a color) at every sample to find where objects exist.
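The sampling step can be sketched as follows. A ray is written as `r(t) = o + t * d`, and one random depth is drawn inside each of `n_samples` equal bins between assumed `near` and `far` bounds. The original NeRF paper uses stratified sampling of this kind for its coarse pass; the function name and parameters here are illustrative, not taken from any particular implementation.

```python
import random
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def sample_along_ray(origin: Vec3, direction: Vec3, near: float, far: float,
                     n_samples: int, rng: random.Random) -> List[Tuple[float, Vec3]]:
    """Stratified sampling: split [near, far] into n_samples equal bins, draw
    one random depth t per bin, and compute the 3D point r(t) = o + t * d."""
    bin_width = (far - near) / n_samples
    samples = []
    for i in range(n_samples):
        t = near + (i + rng.random()) * bin_width       # random depth inside bin i
        point = tuple(o + t * d for o, d in zip(origin, direction))
        samples.append((t, point))
    return samples

rng = random.Random(0)
for t, p in sample_along_ray((0.0, 0.0, -4.0), (0.0, 0.0, 1.0), 2.0, 6.0, 4, rng):
    print(f"t={t:.2f} point={p}")
```

Randomizing the depth inside each bin means that, over many training steps, the network sees every part of the ray rather than the same fixed positions.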

Density as a Shape Indicator

- High density indicates solid matter, such as a wall or an object's surface.

- Low density indicates empty space, such as air.

- The density curve along each ray forms the basic geometry.
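One simple way to read geometry off the density curve is to find where it first rises above a threshold. The sketch below does this for a single ray; the threshold of 5.0 and the sample values are hypothetical. NeRF itself does not need a hard threshold to render, but thresholding density is a common way to extract an explicit surface from a trained model.

```python
from typing import List, Optional

def first_surface_depth(depths: List[float], densities: List[float],
                        threshold: float = 5.0) -> Optional[float]:
    """Return the depth of the first sample whose density reaches `threshold`,
    or None if the ray never passes through anything that dense."""
    for t, sigma in zip(depths, densities):
        if sigma >= threshold:
            return t
    return None

depths = [2.0, 2.5, 3.0, 3.5, 4.0]
densities = [0.0, 0.1, 0.3, 12.0, 15.0]   # hypothetical: empty air, then a wall
print(first_surface_depth(depths, densities))  # 3.5
```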

Implicit Surface Representation

- NeRF does not store polygons or meshes.

- Geometry emerges from the density values.

- The continuous representation keeps surfaces smooth.

Why This Creates Accurate Shapes

- Training draws thousands of rays from all of the input photos at every step.

- Each ray contributes a small geometric clue about where surfaces lie.

- Combined predictions form a full 3D reconstruction with fine detail.

Geometry Learning in NeRF

| Process | Result |
|---|---|
| Ray Sampling | Probes points along each ray where surfaces may appear |
| Density Prediction | Builds object thickness and shape |
| Continuous Field | Avoids the faceting and blockiness of meshes and voxel grids |
| Multi-View Fusion | Combines clues from different camera angles |
| Implicit Surfaces | Produces smooth and natural geometry |

How NeRF Learns Light Behavior

Volume Rendering Theory

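- Each pixel's color is computed by casting a ray through the radiance field and integrating along it.

- Each sample point contributes a small amount of color to the final pixel.

- A sample's contribution depends on its density, its color, and how much light is already blocked by the samples in front of it.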
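The blending along a ray is usually written as a discrete sum over the $N$ samples, in the form given in the original NeRF paper. Here $t_i$ is the depth of sample $i$, $\sigma_i$ its density, $\mathbf{c}_i$ its color, $\delta_i$ the distance to the next sample, and $T_i$ the transmittance, the fraction of light that reaches sample $i$ without being absorbed by the samples in front of it:

$$
\hat{C}(\mathbf{r}) = \sum_{i=1}^{N} T_i \bigl(1 - e^{-\sigma_i \delta_i}\bigr)\, \mathbf{c}_i,
\qquad
T_i = \exp\Bigl(-\sum_{j=1}^{i-1} \sigma_j \delta_j\Bigr),
\qquad
\delta_i = t_{i+1} - t_i
$$

The factor $1 - e^{-\sigma_i \delta_i}$ is the opacity of the segment around sample $i$, so a sample contributes strongly only when it is dense and not hidden behind earlier dense samples.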

View-Dependent Color

- Many materials change appearance with viewpoint.

- NeRF models this by feeding the encoded viewing direction into the network when it predicts color.

- This captures specular highlights and moderate reflections on shiny objects.
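As an illustration of what "view-dependent color" means, the sketch below returns a different color for the same point depending on where it is viewed from. The Phong-style highlight term, the base color, the highlight direction, and the shininess value are all hypothetical choices; NeRF does not use any fixed shading formula and instead learns this dependence from the photos.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / n, v[1] / n, v[2] / n)

def toy_view_dependent_color(view_dir: Vec3,
                             base_rgb: Vec3 = (0.2, 0.2, 0.6),
                             highlight_dir: Vec3 = (0.0, 0.0, 1.0),
                             shininess: float = 20.0) -> Vec3:
    """Hypothetical stand-in for a learned view-dependent color: a constant base
    color plus a white highlight that peaks when the viewing direction lines up
    with `highlight_dir` and fades quickly as it turns away."""
    d = normalize(view_dir)
    h = normalize(highlight_dir)
    alignment = max(0.0, d[0] * h[0] + d[1] * h[1] + d[2] * h[2])
    spec = alignment ** shininess            # sharp falloff for a glossy look
    r, g, b = (min(1.0, c + spec) for c in base_rgb)
    return (r, g, b)

print(toy_view_dependent_color((0.0, 0.0, 1.0)))  # head-on: saturated highlight
print(toy_view_dependent_color((1.0, 0.0, 0.0)))  # from the side: base color only
```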

Accumulated Light Along Rays

- NeRF collects light from all sampled points.

- The renderer blends these contributions with a weighted sum (alpha compositing).

- Smooth color gradients, and any soft shadows present in the photographs, are reproduced as a result.
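The accumulation along one ray can be sketched in plain Python. Each sample gets an opacity `alpha = 1 - exp(-sigma * delta)`, its weight is that opacity times the light still unblocked in front of it, and the pixel is the weighted sum of sample colors. Using `far` as the end of the last segment is an assumption made for this sketch; implementations differ in how they handle the final sample.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def composite_ray(depths: List[float], densities: List[float],
                  colors: List[Vec3], far: float) -> Tuple[Vec3, float]:
    """Discrete volume rendering along one ray.

    Returns (pixel_rgb, accumulated_opacity). Sample i covers the segment from
    depths[i] to the next depth (or to `far` for the last sample).
    """
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0                            # light not yet absorbed
    for i, (sigma, c) in enumerate(zip(densities, colors)):
        t_next = depths[i + 1] if i + 1 < len(depths) else far
        delta = t_next - depths[i]                 # segment length
        alpha = 1.0 - math.exp(-sigma * delta)     # opacity of this segment
        weight = transmittance * alpha             # this sample's share of the pixel
        for k in range(3):
            rgb[k] += weight * c[k]
        transmittance *= 1.0 - alpha               # light left for samples behind
    return (rgb[0], rgb[1], rgb[2]), 1.0 - transmittance

depths = [1.0, 2.0]
densities = [0.0, 100.0]                           # empty air, then a dense surface
colors = [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]
pixel, opacity = composite_ray(depths, densities, colors, far=3.0)
print(pixel, opacity)  # (1.0, 0.0, 0.0) 1.0
```

The green sample has zero density, so it contributes nothing; the dense red sample absorbs essentially all remaining light and sets the pixel color.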

Light Absorption

- Dense points absorb more light.

- Low-density regions let light pass through.

- This helps render semi-transparent media such as smoke and fog. Refraction through glass is not modeled, because rays travel in straight lines.
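For a region of constant density, such as a uniform patch of fog, the transmittance in NeRF's rendering model reduces to the Beer-Lambert law: the fraction of light that survives a straight path of length `d` is `exp(-sigma * d)`. The function name and the sample densities below are illustrative.

```python
import math

def transmittance_through_fog(density: float, distance: float) -> float:
    """Fraction of light surviving a straight path of `distance` through a medium
    of constant `density` (Beer-Lambert law): T = exp(-density * distance)."""
    return math.exp(-density * distance)

# Thicker fog (higher density) lets exponentially less light through.
for sigma in (0.0, 0.1, 1.0, 10.0):
    print(sigma, round(transmittance_through_fog(sigma, 2.0), 4))
```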

Light Modeling Features

| Light Concept | How NeRF Uses It |
|---|---|
| Emission | Sets how much colored light each point sends toward the viewer |
| Absorption | Controls how much light passes through |
| Scattering | Not simulated explicitly; its visible effects are baked into the learned colors |
| Directionality | Produces view-dependent highlights and reflections |
| Ray Integration | Blends all samples into the final pixel color |

How the Neural Network Learns the Radiance Field

Input Encoding

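- Positions and directions are passed through a positional encoding: sines and cosines of each coordinate at increasing frequencies.

- The encoding helps the network represent sharp edges, fine texture, and other high-frequency detail that a plain network input tends to blur.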
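The encoding can be sketched as below: each coordinate `x` is expanded into `sin(2^k * pi * x)` and `cos(2^k * pi * x)` for `k = 0 .. num_freqs - 1`. The original NeRF paper uses 10 frequencies for positions and 4 for viewing directions; the function name and the ordering of the output features are choices made for this sketch.

```python
import math
from typing import List, Sequence

def positional_encoding(p: Sequence[float], num_freqs: int) -> List[float]:
    """NeRF-style frequency encoding: for each frequency 2^k * pi and each
    coordinate x, append sin(2^k * pi * x) and cos(2^k * pi * x)."""
    features: List[float] = []
    for k in range(num_freqs):
        freq = (2.0 ** k) * math.pi
        for x in p:
            features.append(math.sin(freq * x))
            features.append(math.cos(freq * x))
    return features

enc = positional_encoding((0.25, -0.5, 1.0), num_freqs=10)
print(len(enc))  # 60 = 3 coordinates * 10 frequencies * 2 functions
```

The higher frequencies change rapidly as the point moves, which gives the network an easy way to express small-scale detail.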

Network Prediction

- The network, a multilayer perceptron (MLP), outputs a color and a density for each sampled point.

- These predictions improve over many training steps.
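A minimal sketch of one forward pass is shown below, using an untrained network with random weights. The sizes (6 input features, one hidden layer of 16 units) and the weight initialization are hypothetical and far smaller than the original NeRF MLP, which has 8 layers of 256 units and also takes the encoded viewing direction before producing color. The output activations follow the original paper: ReLU keeps density non-negative and a sigmoid keeps colors between 0 and 1.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]

def relu(x: float) -> float:
    return max(0.0, x)

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def init_layer(n_in: int, n_out: int, rng: random.Random) -> Matrix:
    """Random weights for a fully connected layer; the last column is the bias."""
    return [[rng.gauss(0.0, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]

def dense(layer: Matrix, x: List[float]) -> List[float]:
    """Compute W x + b for one layer."""
    return [sum(w * v for w, v in zip(row[:-1], x)) + row[-1] for row in layer]

def tiny_field_mlp(features: List[float], hidden: Matrix,
                   head: Matrix) -> Tuple[List[float], float]:
    """Map the encoded features of one sample point to (rgb, density)."""
    h = [relu(v) for v in dense(hidden, features)]
    out = dense(head, h)                   # 4 raw outputs: r, g, b, density
    rgb = [sigmoid(v) for v in out[:3]]    # squash colors into [0, 1]
    sigma = relu(out[3])                   # density must be non-negative
    return rgb, sigma

rng = random.Random(42)
hidden = init_layer(n_in=6, n_out=16, rng=rng)
head = init_layer(n_in=16, n_out=4, rng=rng)
rgb, sigma = tiny_field_mlp([0.1, -0.2, 0.3, 0.4, 0.0, 1.0], hidden, head)
print(rgb, sigma)
```

Training adjusts the weights in `hidden` and `head` so that these outputs, once rendered, match the photos.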

Loss Function

- Rendered pixel colors are compared with the real pixel colors from the photos.

- The squared difference between them is minimized by gradient descent.

- The process gradually improves realism.
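The loss and a few optimization steps can be sketched as follows. This is a deliberate simplification: here the predicted pixel color itself is updated directly, whereas in NeRF the gradient flows back through volume rendering into the network's weights. The observed color, starting guess, and learning rate are hypothetical.

```python
from typing import List

def mse(pred: List[float], target: List[float]) -> float:
    """Mean squared error between predicted and observed pixel values."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def gradient_step(pred: List[float], target: List[float], lr: float) -> List[float]:
    """One gradient-descent step on the MSE, whose derivative with respect to
    each predicted value p_i is 2 * (p_i - t_i) / n."""
    n = len(pred)
    return [p - lr * 2.0 * (p - t) / n for p, t in zip(pred, target)]

observed = [0.9, 0.4, 0.1]    # hypothetical RGB of one real pixel
predicted = [0.5, 0.5, 0.5]   # hypothetical starting guess from an untrained model
for step in range(5):
    print(step, round(mse(predicted, observed), 4))
    predicted = gradient_step(predicted, observed, lr=0.5)
```

With this learning rate, every step shrinks each color error by a third, so the printed loss falls steadily toward zero.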

Multi-View Consistency

- NeRF must satisfy all camera observations.

- Points must look correct from every angle.

- Consistency forces the model to learn accurate geometry and lighting.

Why Radiance Fields Allow Realistic 3D Scenes

Continuous Representation

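- The scene is defined by a continuous function, not by a fixed set of polygons or voxels.

- The field can be queried at any resolution, although the real detail is limited by the network's capacity and by the input photos.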

Accurate Lighting

- NeRF models natural brightness changes.

- Shadows and reflections seen in the photos are reproduced consistently from new viewpoints.

Strong Geometry Precision

- Density fields capture tiny edges and curves.

- Surfaces stay smooth in close-up views, within the detail the input photos provide.

Adaptation Across Materials

- A single representation covers shiny, matte, semi-transparent, and rough materials.

- Scene realism stays high across different objects.

Real-World Areas Benefiting From NeRF’s Science

Visual Effects

- Film studios can capture real locations as digital scenes with realistic lighting.

- Complex reflections and smooth light transitions are important for convincing visual effects.

Virtual Reality

- Room-scale captures feel lifelike.

- Users can move through a captured space and see it from new viewpoints.

Robotics

- Robots require detailed maps for navigation.

- NeRF provides a dense geometric model of the robot's surroundings.

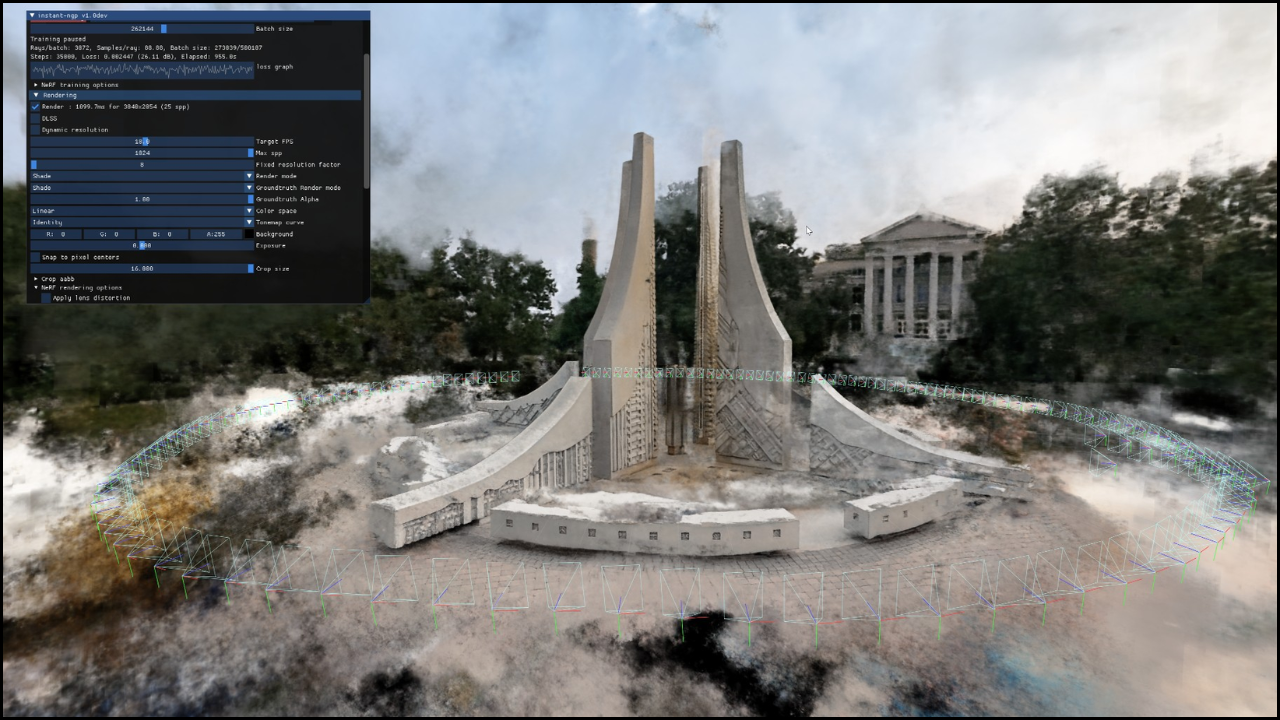

Cultural Preservation

- Historical sites are reconstructed with precise detail.

- Lighting simulations help visualize original environments.

In Summary

Neural Radiance Fields learn light and geometry by combining ray sampling, density prediction, and volume rendering. The approach reconstructs realistic 3D scenes from ordinary photographs with known camera positions, and the combination of continuous fields and view-dependent color makes NeRF a powerful tool for modern graphics, VR, and research. The science behind radiance fields continues to evolve, offering new ways to capture and recreate the real world.